WORD-MADE MAGIC

What "text to image" really means

Many AI models today can generate a visual image from a written description provided by the user. This generative function is called "text to image." It feels like nothing short of magic when our words turn into the exact picture we had in mind. When we first try to generate images with AI, however, chances are we will be bitterly disappointed by what emerges on our screen. Most of us then chalk it up to AI's limitations. After all, what does a machine know about creativity? AI image generation certainly has its limitations, but the vast majority of rookie disappointment has to do with us, the human users.

An AI model interprets keywords in the prompt to produce an image. When the model is updated, the same prompt can produce drastically different outcomes.

The first obstacle we encounter - unless we are already artists in our own right - is that we are not used to the freedom of creating things based on our own ideas and visions. When we sit in front of a blank screen and are urged to type words explaining what we want to see, we suddenly realize we have no idea what to say. As the text-to-image AI of our choice patiently awaits our command, the pressure rises, and our brain keeps turning, trying to find something - anything - we can ask the AI to paint or draw. In this moment of desperation, many of us fall back on something familiar: the character from the anime we watched the night before, the pet sitting next to us, the Thanksgiving dinner scene in the TV commercial running in front of us. That's exactly what happened when I started experimenting with DALL-E (read more about my story).

Once we decide what image to create, we encounter the second challenge: we are woefully out of our depth when it comes to describing in words what we see with our eyes or in our minds. Say we are looking at a beautiful beetle in the garden. Can we identify the hex codes for the colors we perceive? Do we have the words to describe the graceful curve of the insect's body and the leaf? What about the lighting and the composition? Unless we have at least dabbled in studio art, graphic design, filmmaking, or a similar field, we don't even know how to answer these questions.

Then comes the third and perhaps the biggest challenge of all: learning the "AI speak," a.k.a. "prompt engineering." The generative AI models we see today are masters of "natural language": they can understand and respond to commands ("prompts") given by human users in their own language. But make no mistake: "speaking like a human" does not equal "thinking like a human." No matter how human-like they sound, the intelligence behind chatbots like OpenAI's ChatGPT is different from human intelligence in some crucial ways.

Let's think for a moment about our daily interactions with non-human intelligences, like my cat, Lima, who has quite a sophisticated understanding of human language, both verbal and nonverbal. Yet my messages are often ignored, or her responses defy my limited human comprehension. Because of my care and affection for her, I have learned to modify my behavior (enticing instead of yelling) and adjust my expectations (a cat is a cat is a cat). In our interactions with intelligence of an artificial kind, we need to do the same.

Naturally, it's up to each of us to decide whether to invest in communicating with a cat or an artificial intelligence. Especially for the latter, our decision rests on whether we believe AI has the potential to enrich our lives somehow. Such was the case for me with text-to-image AI models, which offer a continuous challenge that is at once artistic and technical. As the models evolve (and rapidly), I also need to update my prompting skills.

Human-AI interaction evolves over time, and image generation is no exception. Once we begin to get a sense of how to prompt for an image, a vast world opens up to explore. We can try different art styles, media, color palettes, compositions, lighting... and any combination of these elements is ours to experiment with.

AI technology has been advancing at breakneck speed for the last few years, and a prompt that worked fabulously a week ago might suddenly become useless when the model is updated or an entirely new model is released. So we'll always be on a learning curve, experimenting and reworking our toolkit.

Recent Creations

Images on this page were chosen from hundreds of images I generated between January and August 2025, using several text-to-image setups: DALL-E 3 via ChatGPT, DALL-E 2 and 3 via Bing Image Creator, Imagen 2 and 3, GPT-4o native image generation, GPT-5 native image generation, and most recently, Gemini 2.5 Flash native image generation.

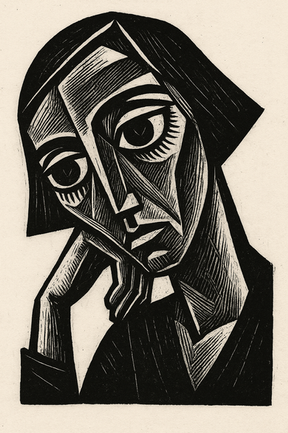

The cover image at the top of this page represents a moment in my personal history with AI-generated art. After a couple of years using DALL-E, I became quite good at writing prompts that mix seemingly disparate art styles into a compelling fusion style. I was accessing DALL-E 3 via Bing Image Creator, which limited the user prompt to 400 characters. I came up with a specific combination of art terms to consistently create this type of image, including "ukiyo-e" (Japanese woodblock print), "Gustav Klimt" (early 20th-century artist associated with Art Nouveau and Symbolism), and "Noir style lighting." The AI would interpret my rather cryptic prompt and combine characteristics from a variety of art styles in the best possible way. I produced a few dozen images in this style over several months. Then a significant model change happened, drastically changing the way the AI created the fusion of art styles. I tried to modify my prompt, but the new model's interpretation of the fusion style was so different that I couldn't reproduce the same effect.

The image gallery represents a range of styles and techniques I worked on during those months: prints, photography, oil painting, anime and claymation, Japanese art, and more.

Collaborative Creativity

There has been a heated debate over whether AI-generated images can be considered "art." In the early days (say, up to 2023), the predominant assumption was that AI-generated images were simply replications of learned visual patterns, without a hint of creativity, whether artificial or human. Many artists and creators spoke out against the infringement of their rights, as these AI models were trained largely on unlicensed visual data with no compensation to human creators.

The question of rights and compensation is being worked out in the legal system as we speak, and some companies (like Adobe) have been moving toward licensed data to train their AI models. The "art" question, on the other hand, is being worked out in the creative process itself, as an increasing number of artists and creators integrate generative AI into their creative work and find new ways of creating art.

From my hobbyist's perspective, with little prior knowledge or training in creative arts, generative AI offers an unprecedented opportunity for creative expression, especially for those who were previously excluded from creative outlets for social, economic, educational, and/or medical reasons. Having this new avenue for creative expression has - literally - changed my life.

AI image generation is an iterative process in which I revise my prompts multiple times, see what image comes back, and tweak them again. I study the art styles and techniques of past masters, seek insights from modern visual arts, and take inspiration from cultural traditions around the world, in much the same way any artist or creator would. The outcomes of this process are often unexpected and surprising: not just me, not just the AI, but something interstitial that emerges from the interaction. It is this novel experience of creativity that keeps me excited about AI-generated art.